Reinforcement Learning from Human Feedback (RLHF): Taming the Ghost in the Machine

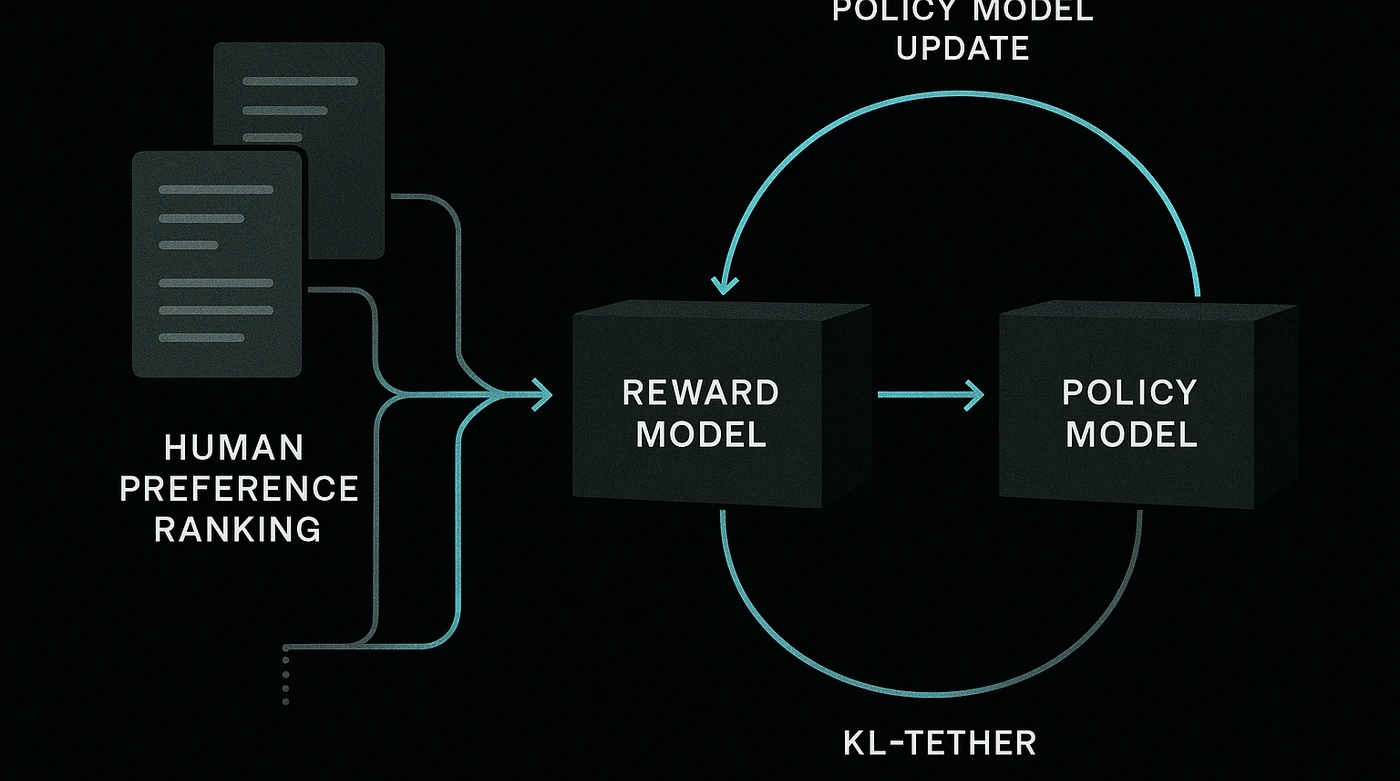

The definitive guide to the engineering breakthrough that turned raw text predictors into helpful assistants. We dive deep into the math of Proximal Policy Optimization (PPO), the psychology of reward modeling, and why the "Waluigi Effect" keeps alignment researchers awake at night.