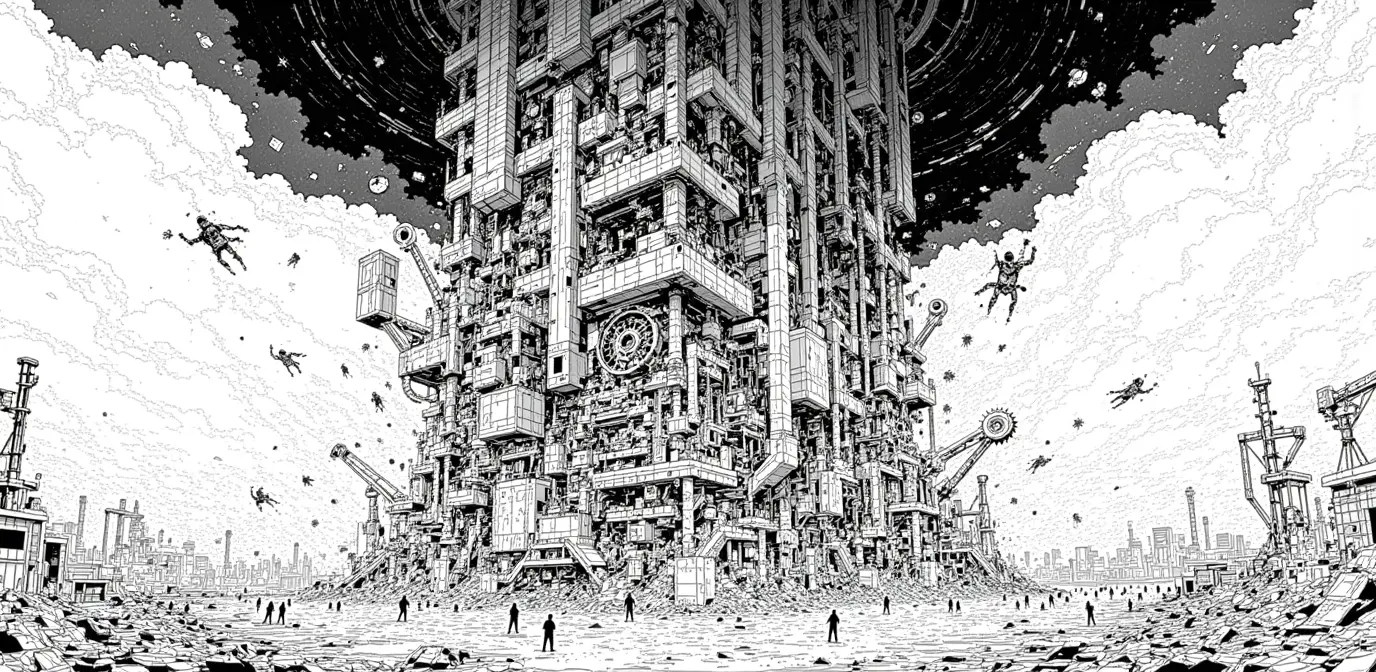

Is the AI Boom Sustainable or a Bubble About to Burst?

Taiwan's AI-driven surge is real, but software teams still need a hard-nosed plan for what happens if demand cools after the infrastructure buildout peak.

74 articles in this category.

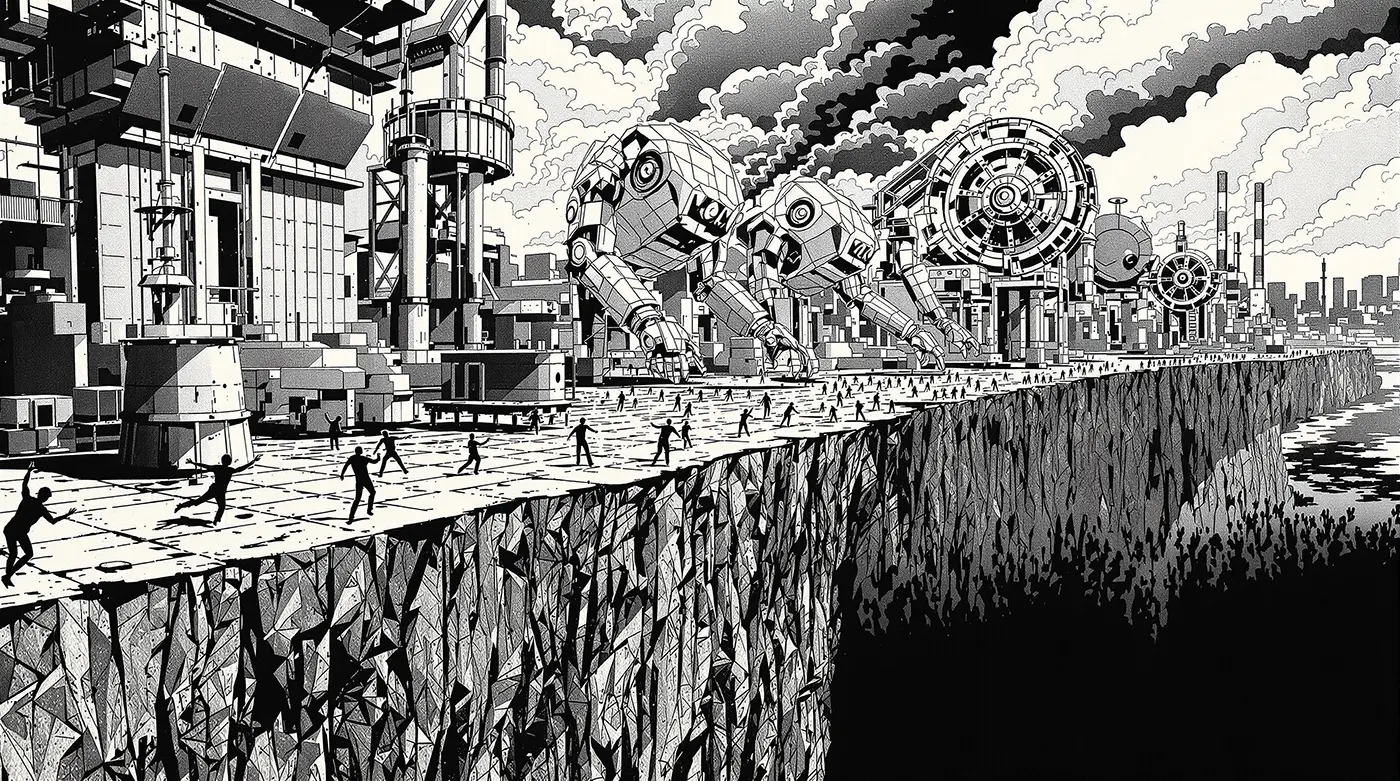

Cloud-scale AI will keep growing, but Taiwan's strongest software opportunity may be edge-first systems that combine local chips, lightweight models, and workflow-specific reliability.

Taiwan's CES 2026 pivot from component supplier to systems exporter is credible, but software companies need productization discipline to turn hardware excellence into off-the-shelf global solutions.

Taiwan's 2026 Agentic AI policy shift is clear: teams that only classify and predict will lose ground to teams that can plan, execute, verify, and recover under real workflow constraints.

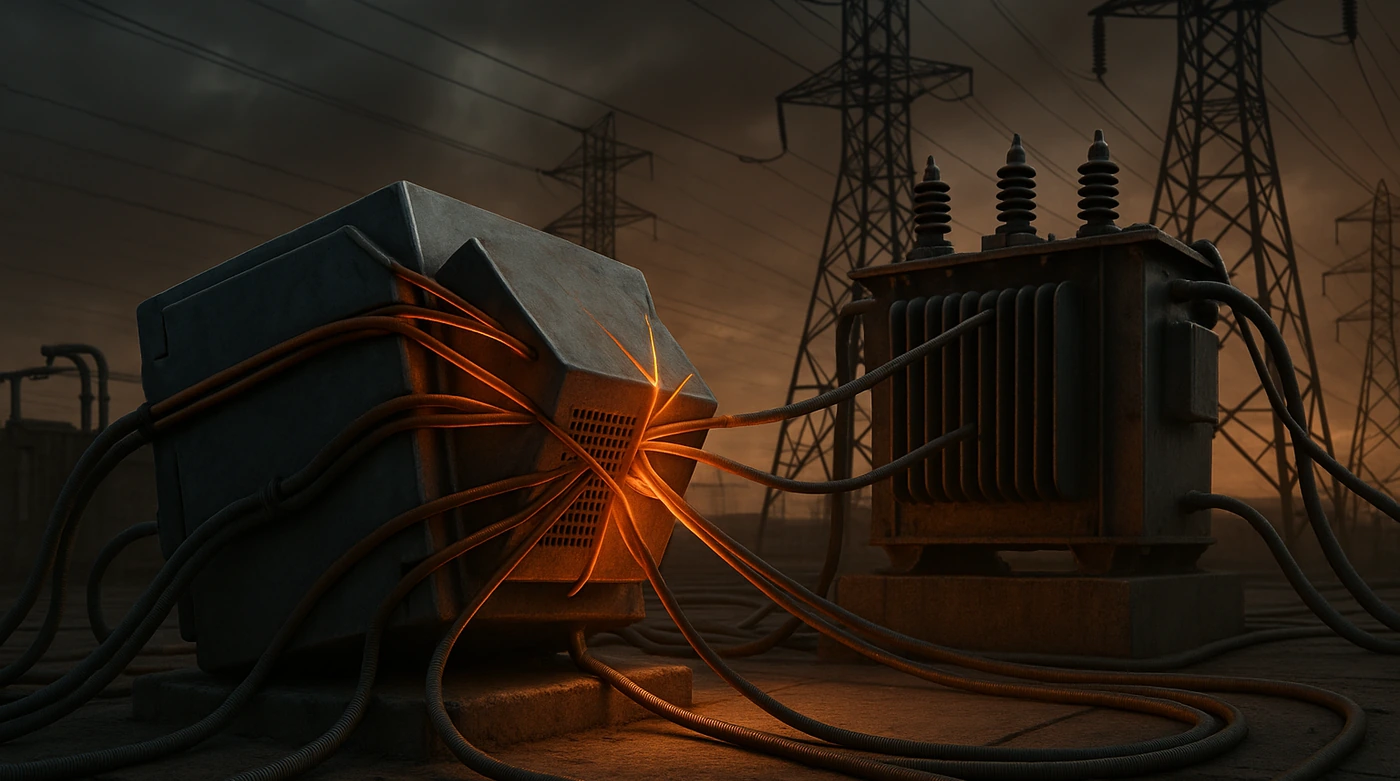

As power constraints, hardware depreciation risk, and talent bottlenecks sharpen in 2026, software teams need a resilience-first operating model that outlives temporary infrastructure booms.

As semiconductor reshoring accelerates and trade relationships rebalance in 2026, software companies need a portfolio strategy that captures U.S. growth without blindly abandoning China-linked revenue pathways.

If AI can dominate Go and chess, why has Taiwan-style 16-tile mahjong not produced a public, superhuman breakthrough model? The answer is not one missing algorithm. It is an ecosystem problem with technical, data, and incentive bottlenecks.

The market has shifted from AI assistants to AI agents with real permissions. With OpenClaw, Moltbook, OpenAI Frontier, and Claude Opus 4.6 all accelerating in early 2026, the key question is no longer capability. It is operational readiness and control.

Pure model scaling is no longer the whole story. A practical map of where the next serious gains are coming from: inference-time compute, retrieval design, tool integration, and human-in-the-loop systems.

A practical playbook for building reliable AI agents in ports, logistics, and compliance-heavy environments where failure is expensive and chat UX is irrelevant.

What Duolingo's last two years reveal about AI-first strategy: massive upside in speed and scale, but real downside when execution looks like replacement instead of augmentation.

AI value in 2026 comes from shared platforms, clear ownership, and enforceable governance. A practical guide to AI factories, organizational design, and building systems that can survive regulatory change.

2026 looks less like another pilot year and more like an execution year for maritime AI. The shift is not about bigger models. It is about measurable ROI from digital twins, unified monitoring, edge retrofits, and agentic operations.

A practical deep dive on open-weight reasoning models in 2026: definitions, architecture patterns, strengths, risks, and how to decide when open weights beat closed APIs.

OpenAI announced ad testing in ChatGPT's free tier this week. That decision changes the economics of consumer AI and reopens hard questions about trust, neutrality, and response influence in ad-supported systems.

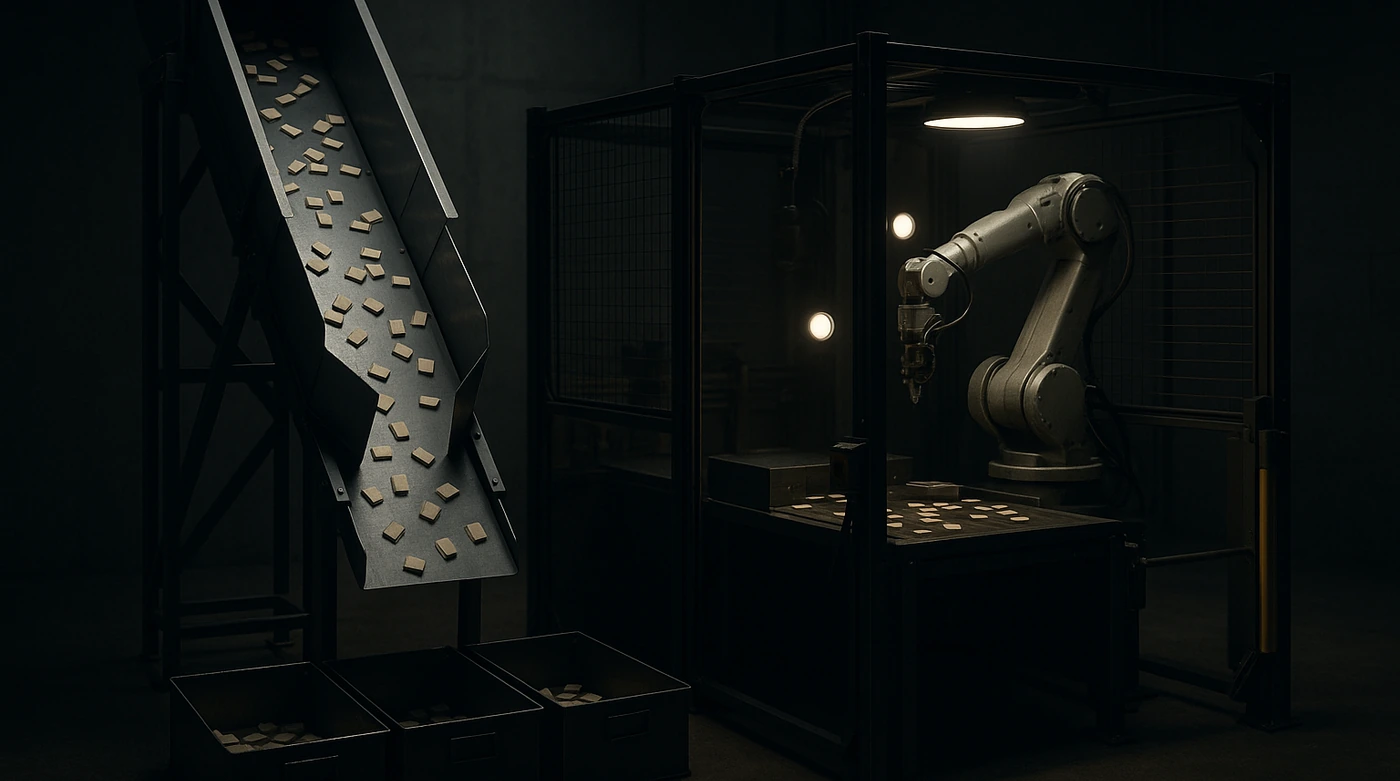

Physical AI is back because the value is tangible. A practical guide to connecting LLM and agent stacks with sensing and actuation, including failure modes, safety economics, and rollout patterns.

As of February 2026, AI can accelerate execution but still cannot own context, culture, brand judgment, or creative direction. A practical guide to where human designers still lead.

If your AI product touches customer data, subprocessors are part of your architecture whether you planned for them or not. In 2026, understanding subprocessor agreements is no longer legal trivia; it is operational competence.

AI transparency is no longer a future compliance problem. In 2026 it is active operational work, with real obligations in the EU and U.S. states and an increasingly clear direction of travel for technical teams.

Consistency isn't just for characters. Learn how to create persistent 3D-consistent rooms and landscapes that your characters can inhabit.

The practical shift in 2026 is not just better model outputs. It is LLMs acting as controllers over files, tools, and workflows, and that same control pattern now shows up in physical AI and robotics.

A practical 2026 deep dive into how LLMs moved from parameter races to production architecture: RAG-first systems, usable long context, multimodality, agent workflows, and hybrid deployment.

A practical guide to designing open-domain AI systems with one concrete port-compliance case, failure containment patterns, and a production-grade evaluation workflow.

OpenAI's January 14, 2026 Cerebras partnership is not an isolated headline. It fits a broader multi-vendor compute strategy that points to a post-monoculture AI stack where non-NVIDIA options become strategically essential.

Hybrid quantum-classical architecture has moved from theory to serious infrastructure work. Here is what that means in 2026 for everyday people, enterprise teams, and creative practitioners without the hype fog.

The 2026 pivot to slim language models is not a downgrade. It is a maturity move: tighter domain tuning, lower latency, lower cost, and often better operational reliability than oversized general stacks.

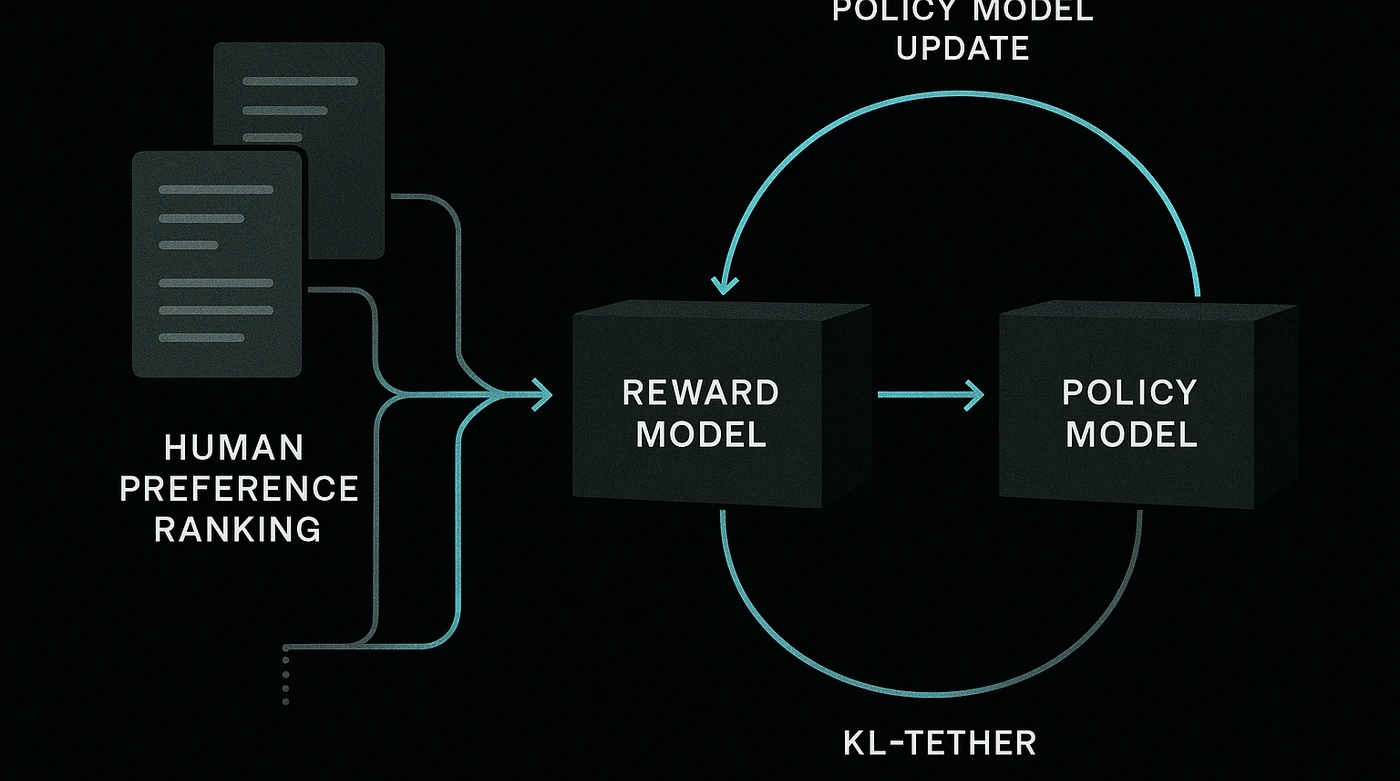

The definitive guide to the engineering breakthrough that turned raw text predictors into helpful assistants. We dive deep into the math of PPO, the psychology of Reward Modeling, and why 'The Waluigi Effect' keeps alignment researchers awake at night.

As AI coding agents proliferate and 'vibe coding' becomes mainstream, a crucial question emerges: who will possess the deep technical knowledge to architect systems, debug complex issues, and mentor the next generation?

Stop generating static portraits. Learn how to use ControlNet and OpenPose to force your AI characters into dynamic, action-packed scenes.

Learn how to use character reference sheets and grid layouts to maintain consistent facial features and persona elements across multiple AI-generated images.

AI images often look 'too perfect'. Learn how to prompt for natural skin texture, imperfect lighting, and candid moments to escape the 'plastic' look.

Taiwan's 99% SME economy faces a document processing paradox. What I've learned about transforming manual workflows into intelligent systems, and why the technical solution is only half the story.

Master the key parameters controlling image generation quality and performance. Learn optimal settings for inference steps, CFG scale, and samplers with practical examples and performance benchmarks.

How to train or prompt a consistent artistic style that works across portraits, landscapes, and objects to create a unified brand identity.

Discover how Grouped Query Attention became the secret weapon behind 1M+ token context windows in 2025's flagship models, enabling massive scaling without exploding memory costs.

Stop generating random snapshots. Learn how to control camera angles, focal lengths, and composition to direct your AI images like a cinematographer.

Discover the fascinating process behind how transformers predict text, from tokenization to probability distributions, demystifying the core mechanism that powers modern AI.

Discover how Mixture of Experts became the secret to trillion-parameter models in 2025, enabling massive AI scaling while using only a fraction of the compute through revolutionary sparse activation.

Explore the vast landscape of AI techniques beyond LLMs, from computer vision to reinforcement learning, and discover how these technologies integrate to create powerful intelligent systems.

The development of multimodal AI agents marks a pivotal step toward creating systems capable of understanding, reasoning, and interacting with the world in human-like ways. This article explores the journey toward integrated intelligence that can see, talk, and act, examining the technical challenges, scaling considerations, and economic realities of building truly multimodal AI systems.

A comprehensive guide to understanding AI model versioning systems, their implications for developers and businesses, and strategies for maintaining stability in a rapidly evolving landscape.

While AI threatens to eliminate millions of jobs, the Jevons paradox suggests a counterintuitive outcome: increased efficiency often leads to expanded consumption. This article explores how AI will transform rather than eliminate human work, creating entirely new job categories while reshaping our understanding of productivity and value.

An exploration of GPU architecture evolution from simple graphics processors to computational powerhouses, examining the shift from high-precision floating point to specialized matrix units and how this transformation is reshaping computing across scientific, creative, and AI domains.

Explore how large language models create a compelling illusion of thought through pattern matching and statistical prediction, despite lacking true understanding or consciousness.

An exploration of how AI systems undergo silent transformations beneath the surface, creating an unstable environment for businesses that depend on them, and the transparency challenges this presents for organizations integrating AI into critical operations.

Beyond algorithms and datasets, successful computer vision requires understanding the complex interaction between light, sensors, mathematics, and human perception. This guide explores the essential knowledge practitioners need to bridge the gap between demos and production-ready systems.

AI models often learn unintended shortcuts rather than meaningful patterns. This article explores five powerful countermeasures to address the hidden curriculum problem in AI training, ensuring models develop true understanding instead of exploiting statistical correlations.

Despite fears of AI replacing human jobs, evidence suggests a more nuanced reality: as artificial intelligence automates routine tasks, it simultaneously elevates the value of uniquely human capabilities. This transformation isn't eliminating our relevance—it's revealing what makes us truly irreplaceable.

Understanding AI isn't just about algorithms and data—it requires an interdisciplinary approach drawing from cognitive science, philosophy, complex systems theory, and more. This guide reveals how combining perspectives from diverse fields can help unravel the mysteries of AI behavior.

Discover the fascinating process behind how large language models learn from data, the challenges involved in training them, and why high-quality training data is becoming increasingly scarce.

Explore the challenges of delivering real-time responsiveness in large language models, and discover the techniques used to reduce latency in time-sensitive applications.

Explore why large language models struggle with complex questions, and learn practical strategies to help you achieve better results when asking sophisticated queries.

Discover how large language models track and resolve references in text, a crucial capability that enables more coherent conversations and a deeper understanding of complex documents.

A detailed guide on what tokens are, how they work in LLMs, and why they matter for anyone using AI language models.

Explore the origins and impacts of bias in large language models, and learn about the strategies researchers use to create more fair and inclusive AI systems.

Modern LLMs go far beyond simple next-word prediction. Discover how transformers, multimodal inputs, and in-context learning redefine what AI can understand and generate.

Explore how overfitting affects large language models, why it happens, and the techniques used to prevent models from memorizing rather than generalizing from training data.

Explore the different approaches that define how large language models learn, from supervised learning to reinforcement learning from human feedback (RLHF), and understand how each method shapes AI behavior.

Explore how sparse attention techniques allow large language models to process longer inputs more efficiently by focusing only on the most relevant relationships between tokens.

Explore the fascinating mechanisms that enable large language models to understand and process lengthy documents, from attention mechanisms to chunking strategies.

Unravel the mystery of how language models track and maintain context in conversations. Learn about contextual embeddings, reference resolution, and other techniques that enable coherent and relevant responses.

Compare the advantages and limitations of open-source and proprietary LLMs, examining real-world examples like Llama, Mistral, and GPT-4 to understand which approach best fits different use cases.

Understand the computational challenge that makes large language models struggle with longer inputs, and learn about the innovative solutions being developed to overcome this limitation.

Explore how large language models attempt to reason, the surprising capabilities they've demonstrated, and the fundamental limitations that still separate them from human-like thinking.

Learn why complex, multi-layered questions often confuse even advanced AI models, and discover practical strategies for crafting better prompts that get you the answers you need.

Explore the challenges of working with limited context windows in large language models, and learn effective strategies for optimizing your inputs when facing memory constraints.

Discover how memory-enhanced transformers are revolutionizing AI by giving language models a persistent 'notebook' to retain information over time, enabling more coherent long-form interactions.

Dive into the revolutionary architecture that powers today's large language models, understanding how transformers process information and why they've become the foundation of modern AI.

Explore the fundamental architectural differences between dense models like GPT-4 and mixture-of-experts models like Switch Transformer, and learn where each approach excels.

Discover how fine-tuning transforms generic language models into specialized tools for specific domains, and learn the practical approaches to implement this powerful technique.

Explore how multimodal LLMs integrate text, images, audio, and video, revolutionizing AI's ability to understand and interact with different types of data.

Exploring the principles behind AI scaling laws and why the future of AI might not just be about building bigger models, but smarter and more efficient ones.

Dive into how attention mechanisms enable LLMs to focus on relevant information in text. Learn about self-attention, multi-head attention, and how they contribute to the remarkable capabilities of modern language models.

A comprehensive guide to understanding hallucinations in large language models, including their causes, examples, and practical strategies to mitigate them.